Whittle--Matérn fields with general smoothness

David Bolin, Alexandre B. Simas

Created: 2022-11-23. Last modified: 2025-05-21.

Source:vignettes/fem_models.Rmd

fem_models.RmdIntroduction

In this vignette we will introduce how to fit Whittle–Matérn fields

with general smoothness based on finite element and rational

approximations. The theory for this approach is provided in Bolin et

al. (2023) and Bolin, Simas, and Xiong (2023). For the

implementation, we make use of the rSPDE

package for the rational approximations.

These models are thus implemented using finite element approximations. Such approximations are not needed for integer smoothness parameters, and for the details about the exact models we refer to the vignettes

For details on the construction of metric graphs, see Working with metric graphs

For further details on data manipulation on metric graphs, see Data manipulation on metric graphs

Constructing the graph and the mesh

We begin by loading the rSPDE and

MetricGraph packages:

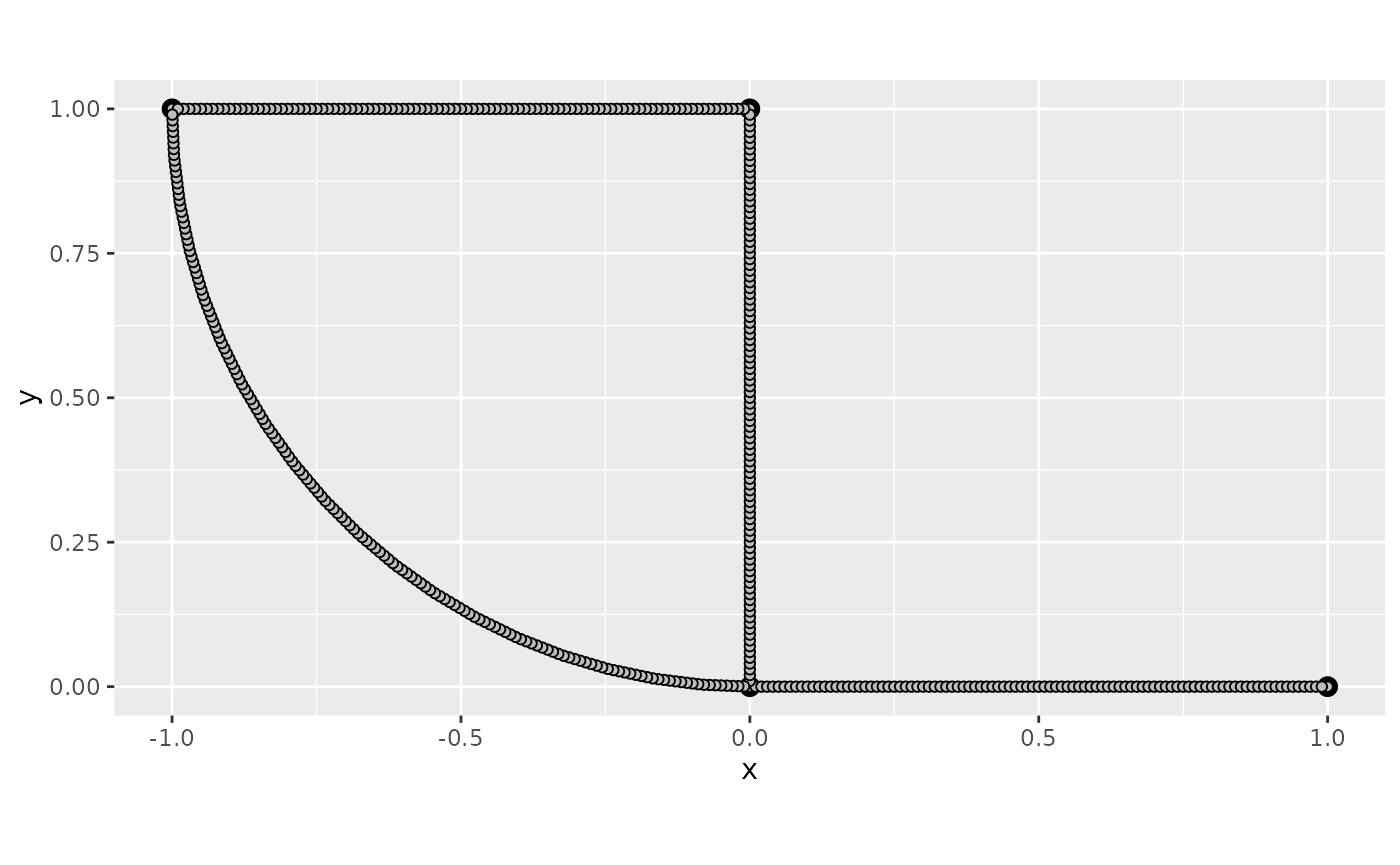

As an example, we consider the following metric graph

edge1 <- rbind(c(0,0),c(1,0))

edge2 <- rbind(c(0,0),c(0,1))

edge3 <- rbind(c(0,1),c(-1,1))

theta <- seq(from=pi,to=3*pi/2,length.out = 20)

edge4 <- cbind(sin(theta),1+ cos(theta))

edges = list(edge1, edge2, edge3, edge4)

graph <- metric_graph$new(edges = edges)

graph$plot()

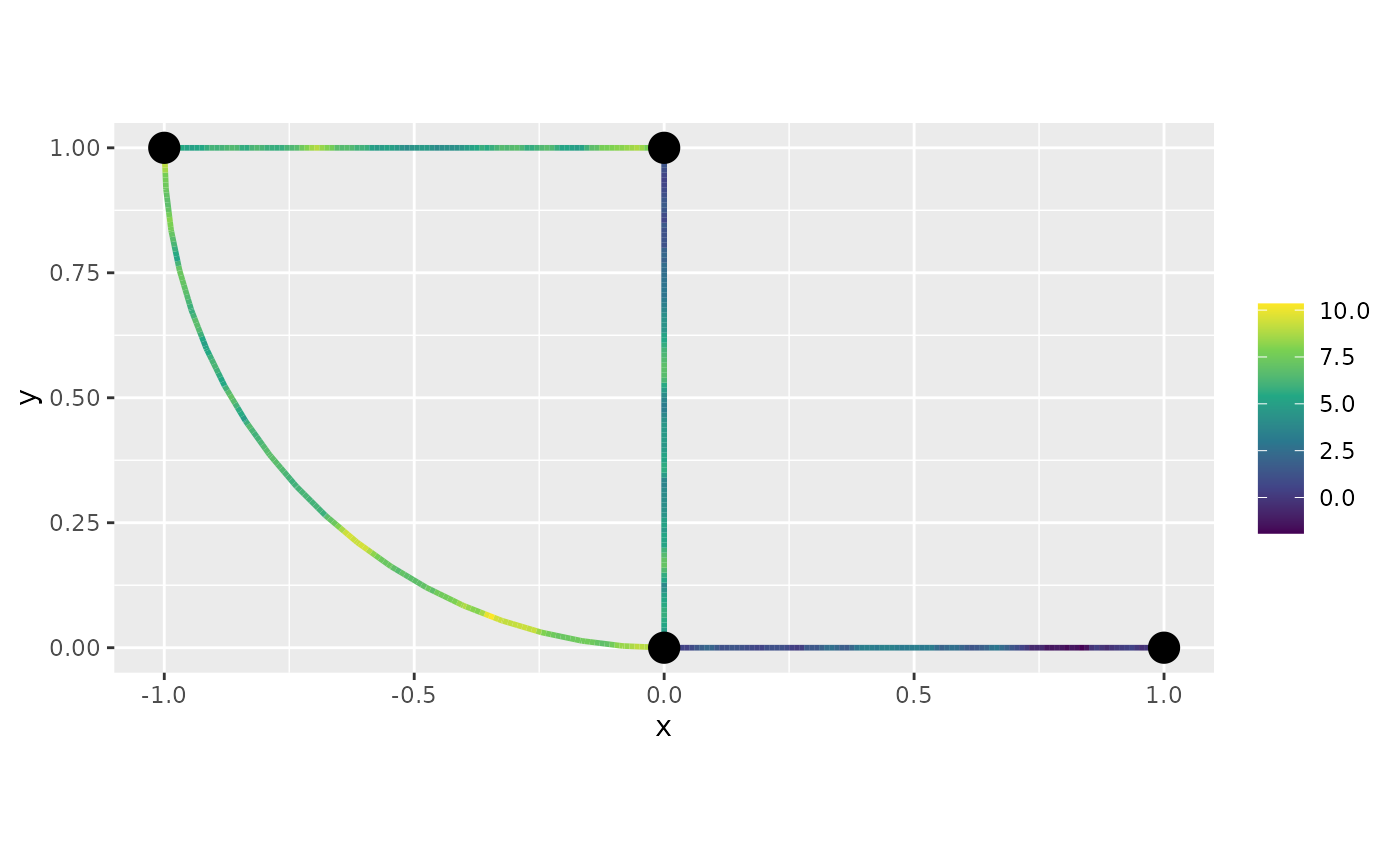

To construct a FEM approximation of a Whittle–Matérn field with general smoothness, we must first construct a mesh on the graph.

graph$build_mesh(h = 0.1)

graph$plot(mesh=TRUE)

In the command build_mesh, the argument h

decides the largest spacing between nodes in the mesh. As can be seen in

the plot, the mesh is very coarse, so let’s reduce the value of

h and rebuild the mesh:

graph$build_mesh(h = 0.01)We are now ready to specify the model

for the Whittle–Matérn field

.

For this, we use the matern.operators function from the

rSPDE package:

sigma <- 1.3

range <- 0.15

nu <- 0.8

rspde.order <- 2

op <- matern.operators(nu = nu, range = range, sigma = sigma,

parameterization = "matern",

m = rspde.order, graph = graph) As can be seen in the code, we specify

via the practical correlation range

.

Also, the model is not parametrized by

but instead by

.

Here, sigma denotes the standard deviation of the field and

nu is the smoothness parameter, which is related to

via the relation

.

The object op contains the matrices needed for evaluating

the distribution of the stochastic weights in the FEM approximation.

Let us simulate the field at the mesh locations and plot the result:

u <- simulate(op)

graph$plot_function(X = u, type = "plotly")If we want to evaluate

at some locations

,

we need to multiply the weights with the FEM basis functions

evaluated at the locations. For this, we can construct the observation

matrix

,

with elements

,

which links the FEM basis functions to the locations. This can be done

by the function fem_basis in the metric graph object. To

illustrate this, let us simulate some observation locations on the graph

and construct the matrix:

obs.per.edge <- 100

obs.loc <- NULL

for(i in 1:graph$nE) {

obs.loc <- rbind(obs.loc,

cbind(rep(i,obs.per.edge), runif(obs.per.edge)))

}

n.obs <- obs.per.edge*graph$nE

A <- graph$fem_basis(obs.loc)In the code, we generate observation locations per edge in the graph, drawn at random. It can be noted that we assume that the observation locations are given in the format where denotes the edge of the observation and is the position on the edge, i.e., the relative distance from the first vertex of the edge.

To compute the precision matrix from the covariance-based rational

approximation one can use the precision() method on object

returned by the matern.operators() function:

Q <- precision(op)As an illustration of the model, let us compute the covariance

function between the process at

,

that is, the point at edge 2 and distance on edge 0.1, and all the other

mesh points. To this end, we can use the helper function

cov_function_mesh that is contained in the op

object:

Using the model for inference

There is built-in support for computing log-likelihood functions and

performing kriging prediction in the rSPDE package which we

can use for the graph model. To illustrate this, we use the simulation

to create some noisy observations of the process. We generate the

observations as

,

where

is Gaussian measurement noise,

and

are covariates generated the relative positions of the observations on

the graph.

sigma.e <- 0.1

x1 <- obs.loc[,1]

x2 <- obs.loc[,2]

Y <- 1 + 2*x1 - 3*x2 + as.vector(A %*% u + sigma.e * rnorm(n.obs))Let us now fit the model. To this end we will use the

graph_lme() function (that, for the finite element models,

acts as a wrapper for the rspde_lme() function from the

rSPDE package). To this end, let us now assemble the

data.frame() with the observations, the observation

locations and the covariates:

df_data <- data.frame(y = Y, edge_number = obs.loc[,1],

distance_on_edge = obs.loc[,2],

x1 = x1, x2 = x2)Let us now add the data to the graph object and plot it:

graph$add_observations(data = df_data, normalized = TRUE)## Adding observations...## Assuming the observations are normalized by the length of the edge.

graph$plot(data = "y")

We can now fit the model. To this end, we use the

graph_lme() function and set the model to

'WM’.

fit <- graph_lme(y ~ x1 + x2, graph = graph, model = "WM")Let us obtain a summary of the model:

summary(fit)##

## Latent model - Whittle-Matern

##

## Call:

## graph_lme(formula = y ~ x1 + x2, graph = graph, model = "WM")

##

## Fixed effects:

## Estimate Std.error z-value Pr(>|z|)

## (Intercept) 0.2642 0.7095 0.372 0.7096

## x1 2.1528 0.1994 10.794 <2e-16 ***

## x2 -2.2051 0.7065 -3.121 0.0018 **

##

## Random effects:

## Estimate Std.error z-value

## alpha 1.275691 0.018579 68.663

## tau 0.053580 0.004974 10.772

## kappa 16.014788 2.575069 6.219

##

## Random effects (Matern parameterization):

## Estimate Std.error z-value

## nu 0.77569 0.01858 41.751

## sigma 1.32631 0.13560 9.781

## range 0.15555 0.02382 6.531

##

## Measurement error:

## Estimate Std.error z-value

## std. dev 0.097310 0.006314 15.41

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Log-Likelihood: -126.5447

## Number of function calls by 'optim' = 101

## Optimization method used in 'optim' = L-BFGS-B

##

## Time used to: fit the model = 13.70209 secsAn improved estimate of the Hessian can be obtained by setting

improve_hessian to TRUE, which improves the

precision of the standard errors.

fit <- graph_lme(y ~ x1 + x2, graph = graph, model = "WM", improve_hessian = TRUE)Let us obtain a summary of the model fitted with the improved Hessian:

summary(fit)##

## Latent model - Whittle-Matern

##

## Call:

## graph_lme(formula = y ~ x1 + x2, graph = graph, model = "WM",

## improve_hessian = TRUE)

##

## Fixed effects:

## Estimate Std.error z-value Pr(>|z|)

## (Intercept) 0.2642 0.7102 0.372 0.7099

## x1 2.1528 0.1998 10.773 <2e-16 ***

## x2 -2.2051 0.7065 -3.121 0.0018 **

##

## Random effects:

## Estimate Std.error z-value

## alpha 1.27569 0.03975 32.095

## tau 0.05358 0.01044 5.132

## kappa 16.01479 2.88084 5.559

##

## Random effects (Matern parameterization):

## Estimate Std.error z-value

## nu 0.77569 0.03975 19.515

## sigma 1.32631 0.13560 9.781

## range 0.15555 0.02382 6.531

##

## Measurement error:

## Estimate Std.error z-value

## std. dev 0.097310 0.006318 15.4

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Log-Likelihood: -126.5447

## Number of function calls by 'optim' = 101

## Optimization method used in 'optim' = L-BFGS-B

##

## Time used to: fit the model = 12.09159 secs

## compute the Hessian = 2.18017 secsWe can also obtain additional information by using the function

glance():

glance(fit)## # A tibble: 1 × 9

## nobs sigma logLik AIC BIC deviance df.residual model alpha

## <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <chr> <dbl>

## 1 400 0.0973 -127. 267. 295. 253. 393 Covariance-Based M… 1.28Let us compare the values of the parameters of the latent model with the true ones:

print(data.frame(sigma = c(sigma, fit$alt_par_coeff$coeff["sigma"]),

range = c(range, fit$alt_par_coeff$coeff["range"]),

nu = c(nu, fit$alt_par_coeff$coeff["nu"]),

row.names = c("Truth", "Estimates")))## sigma range nu

## Truth 1.300000 0.1500000 0.8000000

## Estimates 1.326307 0.1555493 0.7756907Kriging

Given that we have estimated the parameters, let us compute the kriging predictor of the field given the observations at the mesh nodes.

We will perform kriging with the predict() method. To

this end, we need to provide a data.frame containing the

prediction locations, as well as the values of the covariates at the

prediction locations.

df_pred <- data.frame(edge_number = graph$mesh$VtE[,1],

distance_on_edge = graph$mesh$VtE[,2],

x1 = graph$mesh$VtE[,1],

x2 = graph$mesh$VtE[,2])

u.krig <- predict(fit, newdata = df_pred, normalized = TRUE)The estimate is shown in the following figure

graph$plot_function(as.vector(u.krig$mean))

We can also use the augment() function to easily plot

the predictions. Let us a build a 3d plot now and add the observed

values on top of the predictions:

Further details on graph_lme

The graph_lme function provides flexibility in model

fitting by allowing users to fix certain parameters at specific values

or set custom starting values for the optimization process. This can be

useful when you have prior knowledge about some parameters or when you

want to improve convergence by providing better starting points.

Fixing parameters

Parameters can be fixed by using the model_options

argument with elements of the form fix_parname = value,

where parname is the name of the parameter you want to fix.

The parameters that can be fixed in the model_options list

are:

-

fix_sigma_e: Fix the standard deviation of the noise parameter -

fix_sigma: Fix the standard deviation parameter σ -

fix_range: Fix the range parameter -

fix_alpha: Fix the fractional power α

Setting starting values

Similarly, you can set starting values for parameters using elements

of the form start_parname = value in the

model_options list. This is particularly useful when the

default starting values might be far from the optimal values, which

could lead to slow convergence or convergence to a local minimum.

-

start_sigma_e: Starting value for the standard deviation of the noise parameter -

start_sigma: Starting value for the standard deviation parameter σ -

start_range: Starting value for the range parameter -

start_alpha: Starting value for the fractional power α

Example: Fixing and setting starting values

Let us demonstrate how to use these parameters with a graph model example. We will fix the standard deviation σ to 1 and set the starting value for the range parameter to 0.5, on the previous example.

# Fit model with fixed sigma and start_range

fit_fixed <- graph_lme(y ~ x1 + x2, graph = graph, model = "WM",

model_options = list(

fix_sigma = 1, # Fix sigma to 1

start_range = 0.5 # Set starting value for range

))

# Print summary of the model

summary(fit_fixed)##

## Latent model - Whittle-Matern

##

## Call:

## graph_lme(formula = y ~ x1 + x2, graph = graph, model = "WM",

## model_options = list(fix_sigma = 1, start_range = 0.5))

##

## Fixed effects:

## Estimate Std.error z-value Pr(>|z|)

## (Intercept) 0.5825 0.4770 1.221 0.222

## x1 2.0676 0.1348 15.340 < 2e-16 ***

## x2 -2.4141 0.5096 -4.737 2.17e-06 ***

##

## Random effects:

## Estimate Std.error z-value

## nu 0.692486 0.010335 67.00

## sigma (fixed) 1.000000 NA NA

## range 0.117989 0.008994 13.12

##

## Random effects (SPDE parameterization):

## Estimate Std.error z-value

## alpha 1.19249 0.01033 115.4

## tau 0.07975 NA NA

## kappa 19.94839 NA NA

##

## Measurement error:

## Estimate Std.error z-value

## std. dev 0.09719 0.00630 15.43

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Log-Likelihood: -132.3039

## Number of function calls by 'optim' = 77

## Optimization method used in 'optim' = L-BFGS-B

##

## Time used to: fit the model = 9.4444 secs## Log-likelihood with fixed sigma: -132.3039## Log-likelihood with all parameters estimated: -126.5447Fitting a model with replicates

Let us now illustrate how to simulate a data set with replicates and

then fit a model to such data. To simulate a latent model with

replicates, all we do is set the nsim argument to the

number of replicates.

n.rep <- 30

u.rep <- simulate(op, nsim = n.rep)Now, let us generate the observed values :

Note that

is a matrix with 20 columns, each column containing one replicate. We

need to turn y into a vector and create an auxiliary vector

repl indexing the replicates of y:

y_vec <- as.vector(Y.rep)

repl <- rep(1:n.rep, each = n.obs)

df_data_repl <- data.frame(y = y_vec,

edge_number = rep(obs.loc[,1], n.rep),

distance_on_edge = rep(obs.loc[,2], n.rep),

repl = repl)Let us clear the previous observations and add the new data to the graph:

graph$add_observations(data = df_data_repl, normalized = TRUE,

group = "repl", clear_obs = TRUE)## Adding observations...## Assuming the observations are normalized by the length of the edge.We can now fit the model in the same way as before by using the

rspde_lme() function. Note that we can optimize in parallel

by setting parallel to TRUE. If we do not

specify which replicate to consider, in the which_repl

argument, all replicates will be considered.

fit_repl <- graph_lme(y ~ -1, graph = graph, model = "WM", parallel = TRUE)Now, let us see a summary of the fit:

summary(fit_repl)##

## Latent model - Whittle-Matern

##

## Call:

## graph_lme(formula = y ~ -1, graph = graph, model = "WM", parallel = TRUE)##

## No fixed effects.##

## Random effects:

## Estimate Std.error z-value

## alpha 1.256284 0.007876 159.51

## tau 0.060200 0.002002 30.07

## kappa 15.060866 0.506184 29.75

##

## Random effects (Matern parameterization):

## Estimate Std.error z-value

## nu 0.756284 0.007876 96.03

## sigma 1.315602 0.025025 52.57

## range 0.163319 0.005222 31.27

##

## Measurement error:

## Estimate Std.error z-value

## std. dev 0.300303 0.002993 100.3

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Log-Likelihood: -9838.161

## Number of function calls by 'optim' = 58

## Optimization method used in 'optim' = L-BFGS-B

##

## Time used to: fit the model = 7.51883 secs

## set up the parallelization = 13.63897 secsLet us also take a glance of the fit:

glance(fit_repl)## # A tibble: 1 × 9

## nobs sigma logLik AIC BIC deviance df.residual model alpha

## <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <chr> <dbl>

## 1 12000 0.300 -9838. 19684. 19714. 19676. 11996 Covariance-Based … 1.26Let us compare the values of the parameters of the latent model with the true ones:

print(data.frame(sigma = c(sigma, fit_repl$alt_par_coeff$coeff["sigma"]),

range = c(range, fit_repl$alt_par_coeff$coeff["range"]),

nu = c(nu, fit_repl$alt_par_coeff$coeff["nu"]),

row.names = c("Truth", "Estimates")))## sigma range nu

## Truth 1.300000 0.1500000 0.8000000

## Estimates 1.315602 0.1633193 0.7562836Let us do kriging. We will use the same prediction locations as in the previous example. Let us get prediction for replicate 10, then add the original observations on top of them:

Using the R-INLA implementation

We also have an R-INLA implementation of the rational

SPDE approach for metric graphs.

We begin by defining the model by using the

rspde.metric_graph() function. This function contains the

same arguments as the function rspde.matern(). We refer the

reader to the R-INLA

implementation of the rational SPDE approach vignette for further

details.

We begin by clearing the previous observations and adding the observations (for the case without replicates) to the graph:

graph$clear_observations()

graph$add_observations(data = df_data, normalized = TRUE)## Adding observations...## Assuming the observations are normalized by the length of the edge.Let us create the model object:

library(INLA)

rspde_model <- rspde.metric_graph(graph)By default, the order of the rational approximation is 2.

We can now create the auxiliary quantities that will be needed with

the graph_data_rspde() function:

data_rspde <- graph_data_rspde(rspde_model, name = "field")The remaining is standard: we create the formula object, the stack

object, and then fit the model by using the inla()

function. So, first we create the formula object:

f.s <- y ~ -1 + Intercept + x1 + x2 + f(field, model = rspde_model)Now we create the inla.stack object. To such an end,

observe that data_rspde contains the dataset as the

data component, the index as the index

component and the so-called A matrix as the

basis component. We will now create the stack using these

components:

stk.dat <- inla.stack(

data = data_rspde[["data"]]["y"], A = list(data_rspde[["basis"]],1), tag = "est",

effects =

list(c(

data_rspde[["index"]],

list(Intercept = 1)), list(x1 = data_rspde[["data"]]["x1"] ,

x2 = data_rspde[["data"]]["x2"])

)

)Finally, we can fit the model:

rspde_fit <- inla(f.s, data = inla.stack.data(stk.dat),

control.inla = list(int.strategy = "eb"),

control.predictor = list(A = inla.stack.A(stk.dat), compute = TRUE),

num.threads = "1:1"

)We can use the same functions as the rspde fitted models

in inla. For instance, we can see the results in the

original scale by creating the result object:

result_fit <- rspde.result(rspde_fit, "field", rspde_model)

summary(result_fit)## mean sd 0.025quant 0.5quant 0.975quant mode

## std.dev 1.390840 0.1512880 1.122280 1.380590 1.715770 1.357940

## range 0.182596 0.0484043 0.106954 0.175956 0.295919 0.163082

## nu 0.694720 0.1000870 0.507100 0.691971 0.898616 0.686853Let us compare with the true values:

result_df <- data.frame(

parameter = c("std.dev", "range", "nu"),

true = c(sigma, range, nu),

mean = c(

result_fit$summary.std.dev$mean,

result_fit$summary.range$mean,

result_fit$summary.nu$mean

),

mode = c(

result_fit$summary.std.dev$mode,

result_fit$summary.range$mode,

result_fit$summary.nu$mode

)

)

print(result_df)## parameter true mean mode

## 1 std.dev 1.30 1.3908353 1.3579437

## 2 range 0.15 0.1825964 0.1630818

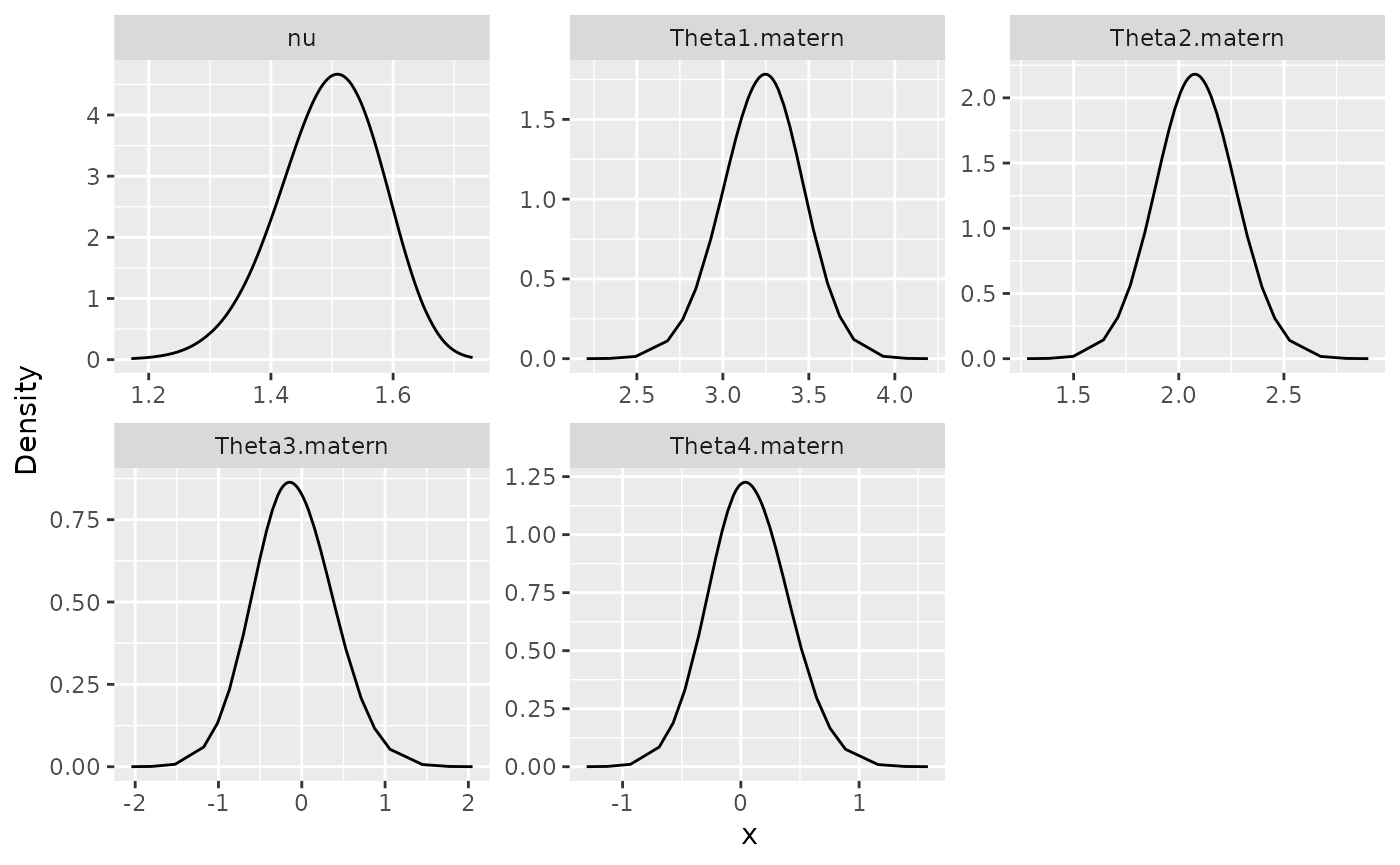

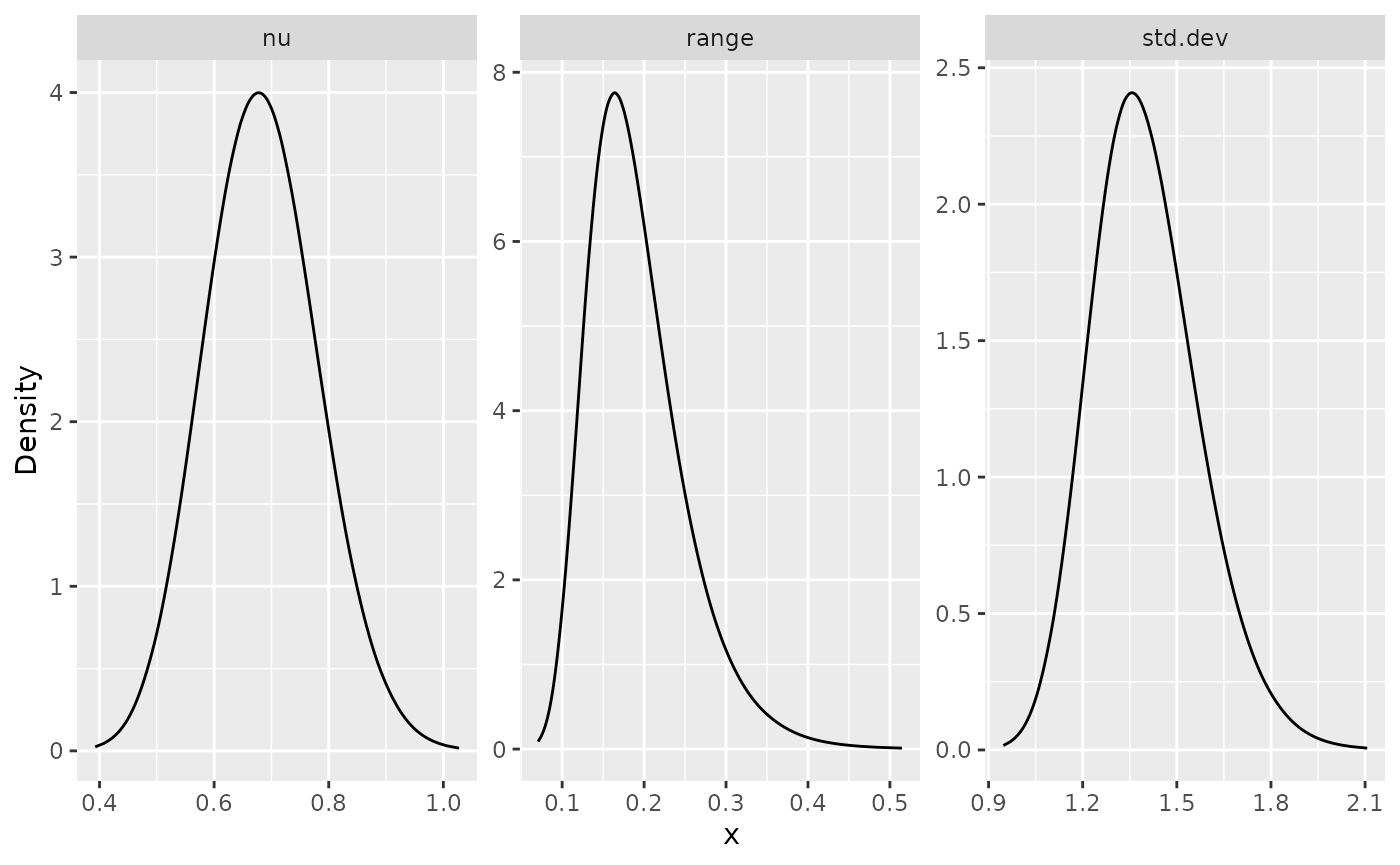

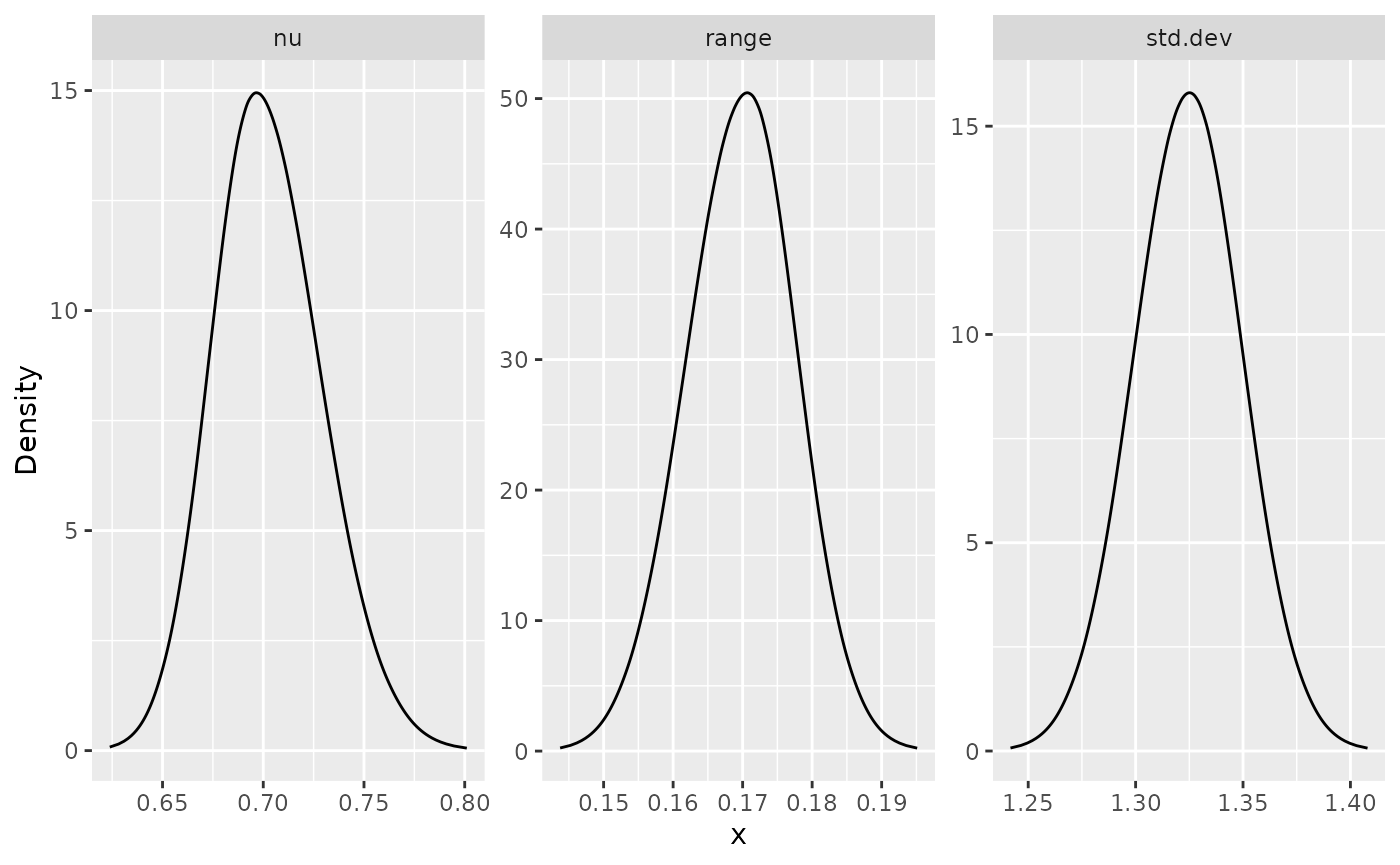

## 3 nu 0.80 0.6947203 0.6868534We can also plot the posterior marginal densities with the help of

the gg_df() function:

posterior_df_fit <- gg_df(result_fit)

library(ggplot2)

ggplot(posterior_df_fit) + geom_line(aes(x = x, y = y)) +

facet_wrap(~parameter, scales = "free") + labs(y = "Density")

Kriging with the R-INLA implementation

We will do kriging on the mesh locations:

pred_loc <- graph$mesh$VtELet us now add the observations for prediction:

graph$add_observations(data = data.frame(y=rep(NA,nrow(pred_loc)),

x1 = graph$mesh$VtE[,1],

x2 = graph$mesh$VtE[,2],

edge_number = pred_loc[,1],

distance_on_edge = pred_loc[,2]),

normalized = TRUE)## Adding observations...## Assuming the observations are normalized by the length of the edge.Let us now create a new model and, then, compute the auxiliary

components at the prediction locations. To this end, we set the argument

only_pred to TRUE, in which it will return the

data.frame containing the NA data.

rspde_model_prd <- rspde.metric_graph(graph)

data_rspde_prd <- graph_data_rspde(rspde_model_prd, only_pred = TRUE)Let us build the prediction stack using the components of

data_rspde_prd and gather it with the estimation stack.

ef.prd <-

list(c(data_rspde_prd[["index"]], list(Intercept = 1)),

list(x1 = data_rspde_prd[["data"]][["x1"]],

x2 = data_rspde_prd[["data"]][["x2"]]))

stk.prd <- inla.stack(

data = data.frame(y = data_rspde_prd[["data"]][["y"]]),

A = list(data_rspde_prd[["basis"]],1), tag = "prd",

effects = ef.prd

)

stk.all <- inla.stack(stk.dat, stk.prd)Let us obtain the predictions:

rspde_fitprd <- inla(f.s,

data = inla.stack.data(stk.all),

control.predictor = list(

A = inla.stack.A(stk.all),

compute = TRUE, link = 1

),

control.compute = list(

return.marginals = FALSE,

return.marginals.predictor = FALSE

),

control.inla = list(int.strategy = "eb"),

num.threads = "1:1"

)Let us now extract the indices of the predicted nodes and store the means:

id.prd <- inla.stack.index(stk.all, "prd")$data

m.prd <- rspde_fitprd$summary.fitted.values$mean[id.prd]Finally, let us plot the predicted values. To this end we will use

the plot_function() graph method.

graph$plot_function(m.prd, type = "plotly") Using R-INLA implementation to fit models with

replicates

Let us begin by cloning the graph and clearing the observations on the cloned graph:

graph_rep <- graph$clone()

graph_rep$clear_observations()We will now add the data with replicates to the graph:

graph_rep$add_observations(data = data.frame(y=as.vector(Y.rep),

edge_number = rep(obs.loc[,1], n.rep),

distance_on_edge = rep(obs.loc[,2], n.rep),

repl = rep(1:n.rep, each = n.obs)),

group = "repl",

normalized = TRUE)## Adding observations...## Assuming the observations are normalized by the length of the edge.Let us create a new rspde model object:

rspde_model_rep <- rspde.metric_graph(graph_rep)To fit the model with replicates we need to create the auxiliary

quantities with the graph_data_rspde() function, where we

set the repl argument in the function

graph_data_spde to .all since we want to use

all replicates:

data_rspde_rep <- graph_data_rspde(rspde_model_rep,

name = "field", repl = ".all",

repl_col = "repl")Let us now create the corresponding inla.stack

object:

st.dat.rep <- inla.stack(

data = data_rspde_rep[["data"]],

A = data_rspde_rep[["basis"]],

effects = data_rspde_rep[["index"]]

)Observe that we need the response variable y to be a

vector. We can now create the formula object, remembering

that since we gave the name argument field, when creating

the index, we need to pass field.repl to the

formula:

f.rep <-

y ~ -1 + f(field,

model = rspde_model_rep,

replicate = field.repl

)We can, finally, fit the model:

rspde_fit_rep <-

inla(f.rep,

data = inla.stack.data(st.dat.rep),

family = "gaussian",

control.predictor =

list(A = inla.stack.A(st.dat.rep)),

num.threads = "1:1"

)We can obtain the estimates in the original scale with the

rspde.result() function:

result_fit_rep <- rspde.result(rspde_fit_rep, "field", rspde_model_rep)

summary(result_fit_rep)## mean sd 0.025quant 0.5quant 0.975quant mode

## std.dev 1.324450 0.02458420 1.276380 1.324420 1.372940 1.324590

## range 0.169532 0.00696801 0.155920 0.169537 0.183269 0.169733

## nu 0.701886 0.02380730 0.657059 0.701126 0.750462 0.698752Let us compare with the true values of the parameters:

result_rep_df <- data.frame(

parameter = c("std.dev", "range", "nu"),

true = c(sigma, range, nu),

mean = c(

result_fit_rep$summary.std.dev$mean,

result_fit_rep$summary.range$mean,

result_fit_rep$summary.nu$mean

),

mode = c(

result_fit_rep$summary.std.dev$mode,

result_fit_rep$summary.range$mode,

result_fit_rep$summary.nu$mode

)

)

print(result_rep_df)## parameter true mean mode

## 1 std.dev 1.30 1.3244530 1.3245936

## 2 range 0.15 0.1695316 0.1697334

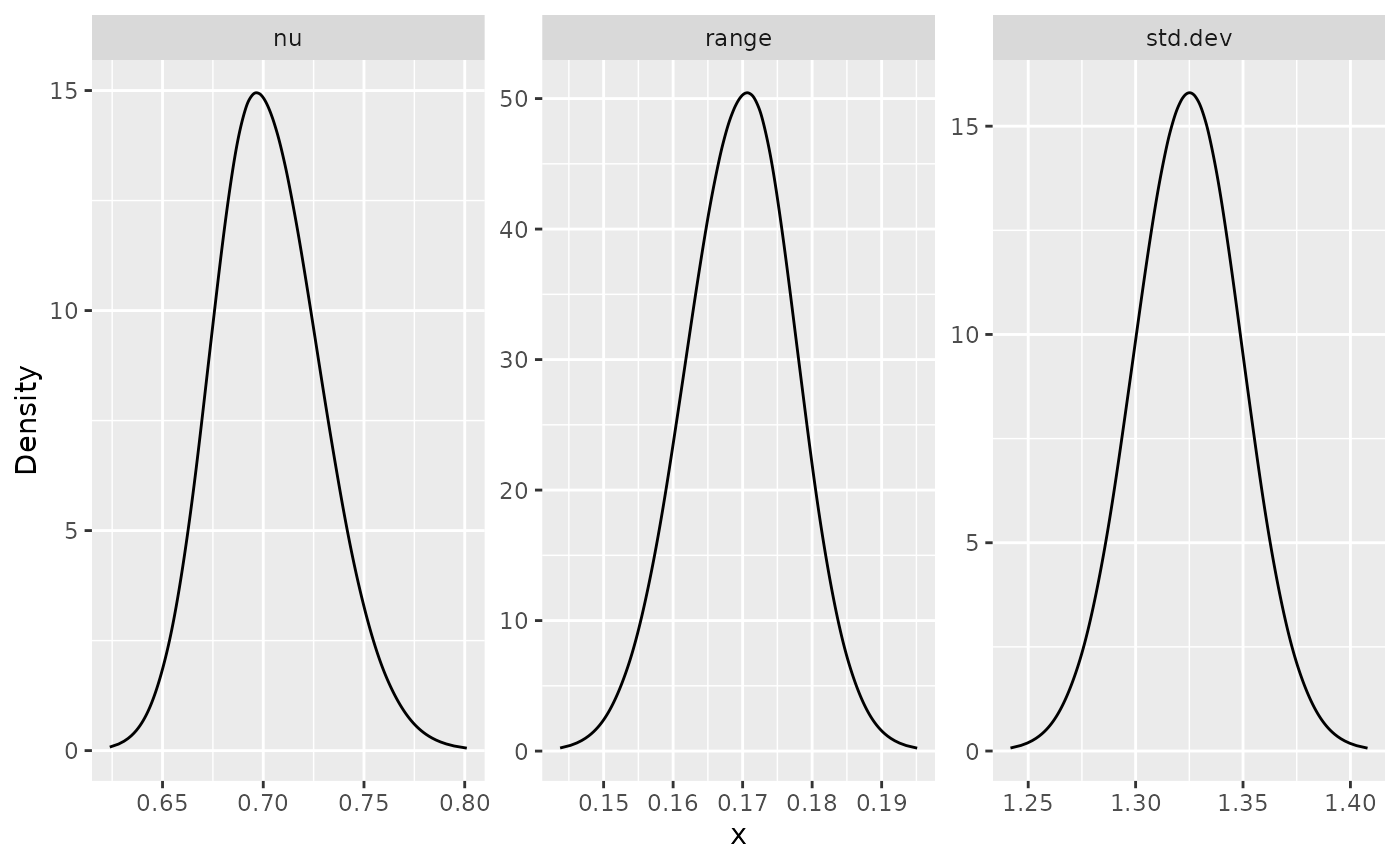

## 3 nu 0.80 0.7018863 0.6987524We can also plot the posterior marginal densities with the help of

the gg_df() function:

posterior_df_fit_rep <- gg_df(result_fit_rep)

ggplot(posterior_df_fit_rep) + geom_line(aes(x = x, y = y)) +

facet_wrap(~parameter, scales = "free") + labs(y = "Density")

Using inlabru implementation

The inlabru package allows us to fit models and do

kriging in a straighforward manner, without having to handle

A matrices, indices nor inla.stack objects.

Therefore, we suggest the reader to use this implementation when using

our implementation to fit real data.

Let us clear the graph, since it contains NA

observations we used for prediction, add the observations again, and

create a new rSPDE model object:

graph$clear_observations()

graph$add_observations(data = df_data,

normalized = TRUE)## Adding observations...## Assuming the observations are normalized by the length of the edge.

rspde_model <- rspde.metric_graph(graph)Let us now load the inlabru package and create the

component (which is inlabru’s formula-like object). Let us

begin by building the auxiliary data to be used with the

graph_data_rspde() function, where we pass the name of the

location variable in the above formula as the loc_name

argument, which in this case is "loc":

data_rspde_bru <- graph_data_rspde(rspde_model, bru = TRUE)Now, we create the component to be used in inlabru, in

which we pass the index element from the

data_rspde_bru object as index locations:

library(inlabru)

cmp <-

y ~ -1 + Intercept(1) + x1 + x2 + field(

cbind(.edge_number, .distance_on_edge),

model = rspde_model

) Now, we can directly fit the model, by using the data

element of data_rspde_bru:

Let us now obtain the estimates of the parameters in the original

scale by using the rspde.result() function:

result_bru_fit <- rspde.result(rspde_bru_fit, "field", rspde_model)

summary(result_bru_fit)## mean sd 0.025quant 0.5quant 0.975quant mode

## std.dev 1.386230 0.1503330 1.120370 1.375620 1.710070 1.351170

## range 0.180585 0.0428815 0.112980 0.174806 0.280500 0.163179

## nu 0.695672 0.0887179 0.527086 0.694099 0.874366 0.692203Let us compare with the true values of the parameters:

result_bru_df <- data.frame(

parameter = c("std.dev", "range", "nu"),

true = c(sigma, range, nu),

mean = c(

result_bru_fit$summary.std.dev$mean,

result_bru_fit$summary.range$mean,

result_bru_fit$summary.nu$mean

),

mode = c(

result_bru_fit$summary.std.dev$mode,

result_bru_fit$summary.range$mode,

result_bru_fit$summary.nu$mode

)

)

print(result_bru_df)## parameter true mean mode

## 1 std.dev 1.30 1.3862267 1.3511702

## 2 range 0.15 0.1805848 0.1631789

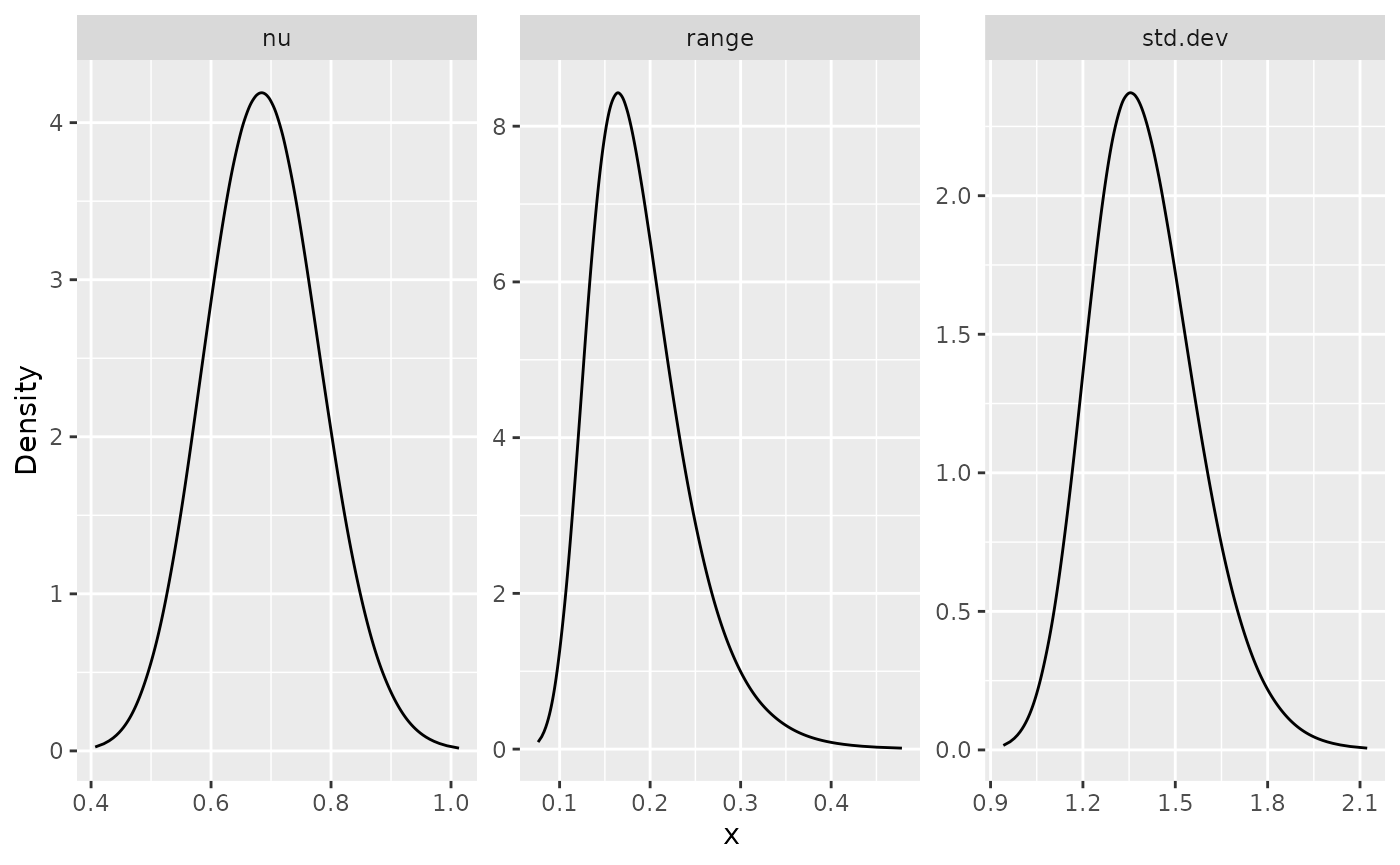

## 3 nu 0.80 0.6956716 0.6922031We can also plot the posterior marginal densities with the help of

the gg_df() function:

posterior_df_bru_fit <- gg_df(result_bru_fit)

ggplot(posterior_df_bru_fit) + geom_line(aes(x = x, y = y)) +

facet_wrap(~parameter, scales = "free") + labs(y = "Density")

Kriging with the inlabru implementation

It is very easy to do kriging with the inlabru

implementation. We simply need to provide the prediction locations to

the predict() method.

In this example we will use the mesh locations. To this end we will

use the get_mesh_locations() method. We also set

bru=TRUE to obtain a data frame suitable to be used with

inlabru. In this case, the mesh locations will be returned

as a data.frame with the location columns

.edge_number and .distance_on_edge. We will,

then, add the covariates x1 and x2 to the data

frame:

prd_loc <- graph$get_mesh_locations(bru = TRUE)

prd_loc[["x1"]] <- prd_loc[,1]

prd_loc[["x2"]] <- prd_loc[,2] Now, we can simply provide these locations to the

predict method along with the fitted object

rspde_bru_fit:

y_pred <- predict(rspde_bru_fit, newdata=prd_loc,

~Intercept + x1 + x2 + field)Let us now prepare the predictions so we can plot them easily by

using the process_rspde_predictions() function:

y_pred <- process_rspde_predictions(y_pred, graph = graph, PtE = prd_loc)Finally, let us plot the predicted values. To this end we will use

the plot() method on y_pred:

plot(y_pred)

We can also create the 3d plot, together with the true data:

p <- graph$plot(data = "y", type = "plotly")

plot(y_pred, type = "plotly", p = p)Using inlabru to fit models with replicates

We can also use our inlabru implementation to fit models

with replicates. We will consider the same data that was generated

above, where the number of replicates is 30.

For this implementation we will use the rspde_model_rep

object.

We can now create the component, passing the vector with the indices

of the replicates as the replicate argument. To obtain the

auxiliary data, we will pass repl argument we use the

function graph_data_rspde(), where we set it to

.all, since we want all replicates. Further, we also set

the argument bru to TRUE.

data_rspde_rep <- graph_data_rspde(rspde_model_rep, repl = ".all",

bru = TRUE, repl_col = "repl")We can now define the bru component formula, passing the

repl as the replicate argument:

cmp_rep <-

y ~ -1 + field(cbind(.edge_number, .distance_on_edge),

model = rspde_model_rep,

replicate = repl)Now, we are ready to fit the model:

rspde_bru_fit_rep <-

bru(cmp_rep,

data=data_rspde_rep[["data"]],

options=list(

family = "gaussian",

num.threads = "1:1")

)We can obtain the estimates in the original scale with the

rspde.result() function:

result_bru_fit_rep <- rspde.result(rspde_bru_fit_rep, "field", rspde_model_rep)

summary(result_bru_fit_rep)## mean sd 0.025quant 0.5quant 0.975quant mode

## std.dev 1.324450 0.02458420 1.276380 1.324420 1.372940 1.324590

## range 0.169532 0.00696801 0.155920 0.169537 0.183269 0.169733

## nu 0.701886 0.02380730 0.657059 0.701126 0.750462 0.698752Let us compare with the true values of the parameters:

result_bru_rep_df <- data.frame(

parameter = c("std.dev", "range", "nu"),

true = c(sigma, range, nu),

mean = c(

result_bru_fit_rep$summary.std.dev$mean,

result_bru_fit_rep$summary.range$mean,

result_bru_fit_rep$summary.nu$mean

),

mode = c(

result_bru_fit_rep$summary.std.dev$mode,

result_bru_fit_rep$summary.range$mode,

result_bru_fit_rep$summary.nu$mode

)

)

print(result_bru_rep_df)## parameter true mean mode

## 1 std.dev 1.30 1.3244530 1.3245936

## 2 range 0.15 0.1695316 0.1697334

## 3 nu 0.80 0.7018863 0.6987524We can also plot the posterior marginal densities with the help of

the gg_df() function:

posterior_df_bru_fit_rep <- gg_df(result_bru_fit_rep)

ggplot(posterior_df_bru_fit_rep) + geom_line(aes(x = x, y = y)) +

facet_wrap(~parameter, scales = "free") + labs(y = "Density")

Let us now do prediction for observations of replicate

10. We start by building the data list with the prediction

locations:

data_prd_repl <- graph$get_mesh_locations(bru = TRUE)

data_prd_repl[["repl"]] <- rep(10, nrow(data_prd_repl))Let us now obtain predictions for this replicate:

y_pred <- predict(rspde_bru_fit_rep,

newdata=data_prd_repl,

~field_eval(cbind(.edge_number, .distance_on_edge),

replicate = repl))Let us now process the predictions:

y_pred <- process_rspde_predictions(y_pred, graph = graph, PtE = data_prd_repl)We can now plot the predictions along with the observed values for

replicate 10:

p <- plot(y_pred, type = "plotly")

graph_rep$plot(data = "y", group = 10, type = "plotly", p = p)An example with a non-stationary model

Our goal now is to show how one can fit model with non-stationary (std. deviation) and non-stationary (a range parameter). One can also use the parameterization in terms of non-stationary SPDE parameters and .

We follow the same structure as INLA. However,

INLA only allows one to specify B.tau and

B.kappa matrices, and, in INLA, if one wants

to parameterize in terms of range and standard deviation one needs to do

it manually. Here we provide the option to directly provide the matrices

B.sigma and B.range.

The usage of the matrices B.tau and B.kappa

are identical to the corresponding ones in

inla.spde2.matern() function. The matrices

B.sigma and B.range work in the same way, but

they parameterize the stardard deviation and range, respectively.

The columns of the B matrices correspond to the same

parameter. The first column does not have any parameter to be estimated,

it is a constant column.

So, for instance, if one wants to share a parameter with both

sigma and range (or with both tau

and kappa), one simply let the corresponding column to be

nonzero on both B.sigma and B.range (or on

B.tau and B.kappa).

Creating the graph and adding data

For this example we will consider the pems data

contained in the MetricGraph package. The data consists of

traffic speed observations on highways in the city of San Jose,

California. The variable y contains the traffic speeds.

pems_graph <- metric_graph$new(edges = pems$edges)

pems_graph$add_observations(data = pems$data)

pems_graph$prune_vertices()

pems_graph$build_mesh(h=0.1)The summary of this graph:

summary(pems_graph)## A metric graph object with:

##

## Vertices:

## Total: 347

## Degree 1: 11; Degree 2: 16; Degree 3: 315; Degree 4: 5;

## With incompatible directions: 17

##

## Edges:

## Total: 504

## Lengths:

## Min: 0.01040218 ; Max: 7.677232 ; Total: 470.7559

## Weights:

## Columns: .weights

## That are circles: 0

##

## Graph units:

## Vertices unit: degree ; Lengths unit: km

##

## Longitude and Latitude coordinates: TRUE

## Which spatial package: sp

## CRS: +proj=longlat +datum=WGS84 +no_defs

##

## Some characteristics of the graph:

## Connected: TRUE

## Has loops: FALSE

## Has multiple edges: TRUE

## Is a tree: FALSE

## Distance consistent: FALSE

## Has Euclidean edges: FALSE

##

## Computed quantities inside the graph:

## Laplacian: FALSE ; Geodesic distances: TRUE

## Resistance distances: FALSE ; Finite element matrices: FALSE

##

## Mesh:

## Max h_e: 0.09998277 ; Min n_e: 0

##

## Data:

## Columns: y

## Groups: .group

##

## Tolerances:

## vertex-vertex: 0.001

## vertex-edge: 0.001

## edge-edge: 0Observe that it is a non-Euclidean graph.

Let us create as non-stationary covariates, the position on the edge, which will capture if the traffic speed was taken close to the intersections. We will make this function symmetric around 0.5 by subtracting 0.5 for points larger than 0.5. That is, the covariate is zero close to intersections.

cov_pos <- 2 * ifelse(pems_graph$mesh$VtE[,2] > 0.5,

1-pems_graph$mesh$VtE[,2],

pems_graph$mesh$VtE[,2])We will now build the non-stationary matrices to be used:

Let us also obtain the same covariate for the observations:

cov_obs <- pems$data[[".distance_on_edge"]]

cov_obs <- 2 * ifelse(cov_obs > 0.5,

1 - cov_obs,

cov_obs)Let add this covariate to the data:

pems_graph$add_observations(data = pems_graph$mutate(cov_obs = cov_obs),

clear_obs = TRUE)## Adding observations...## Assuming the observations are NOT normalized by the length of the edge.## The unit for edge lengths is km## The current tolerance for removing distant observations is (in km): 3.83861599015656Fitting the model with graph_lme

We are now in position to fit this model using the

graph_lme() function. We will also add cov_obs

as a covariate for the model.

fit <- graph_lme(y ~ cov_obs, graph = pems_graph, model = list(type = "WhittleMatern",

B.sigma = B.sigma, B.range = B.range, fem = TRUE))Let us now obtain a summary of the fitted model:

summary(fit)##

## Latent model - Generalized Whittle-Matern

##

## Call:

## graph_lme(formula = y ~ cov_obs, graph = pems_graph, model = list(type = "WhittleMatern",

## B.sigma = B.sigma, B.range = B.range, fem = TRUE))

##

## Fixed effects:

## Estimate Std.error z-value Pr(>|z|)

## (Intercept) 41.340 9.702 4.261 2.04e-05 ***

## cov_obs 4.517 1.749 2.583 0.00979 **

##

## Random effects:

## Estimate Std.error z-value

## nu 2.0027 0.9125 2.195

## theta1 2.6706 NaN NaN

## theta2 1.8666 0.2694 6.928

## theta3 1.1420 NaN NaN

## theta4 0.6641 NaN NaN

##

## Measurement error:

## Estimate Std.error z-value

## std. dev 7.036 0.364 19.33

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Log-Likelihood: -1210.702

## Number of function calls by 'optim' = 501

## Optimization method used in 'optim' = L-BFGS-B

##

## Time used to: fit the model = 3.37042 minsLet us plot the range parameter along the mesh, so we can see how it is varying:

est_range <- exp(B.range[,-1]%*%fit$coeff$random_effects[2:5])

pems_graph$plot_function(X = est_range, vertex_size = 0,

type = "mapview", mapview_caption = "Range")Similarly, we have for sigma:

est_sigma <- exp(B.sigma[,-1]%*%fit$coeff$random_effects[2:5])

pems_graph$plot_function(X = est_sigma, vertex_size = 0,

type = "mapview", mapview_caption = "Sigma")Our goal now is to plot the estimated marginal standard deviation of

this model. To this end, we start by creating the non-stationary Matérn

operator using the rSPDE package:

rspde_object_ns <- rSPDE::spde.matern.operators(graph = pems_graph,

parameterization = "matern",

B.sigma = B.sigma,

B.range = B.range,

theta = fit$coeff$random_effects[2:5],

nu = fit$coeff$random_effects[1] - 0.5)Now, we compute the estimated marginal standard deviation:

est_cov_matrix <- rspde_object_ns$covariance_mesh()

est_std_dev <- sqrt(Matrix::diag(est_cov_matrix))We can now plot:

pems_graph$plot_function(X = est_std_dev, vertex_size = 0,

type = "mapview", mapview_caption = "Std. dev")Fixing parameters in non-stationary models

In non-stationary models, the parameters are labeled as theta1,

theta2, etc., corresponding to the coefficients in the B matrices.

Similar to the stationary case, we can fix individual parameters or set

starting values, but these options must be set in the

model_options list argument using fix_theta1,

fix_theta2, etc., or start_theta1,

start_theta2, etc.

For example, if we want to fix the first coefficient theta1 to 0 in the previous example:

# Fit model with fixed theta1 parameter

fit_ns_fixed_theta1 <- graph_lme(y ~ cov_obs,

graph = pems_graph,

model = list(type = "WhittleMatern",

B.sigma = B.sigma,

B.range = B.range,

fem = TRUE),

model_options = list(fix_theta1 = 0.5)) # Fix theta1 to 0.5

summary(fit_ns_fixed_theta1)##

## Latent model - Generalized Whittle-Matern

##

## Call:

## graph_lme(formula = y ~ cov_obs, graph = pems_graph, model = list(type = "WhittleMatern",

## B.sigma = B.sigma, B.range = B.range, fem = TRUE), model_options = list(fix_theta1 = 0.5))

##

## Fixed effects:

## Estimate Std.error z-value Pr(>|z|)

## (Intercept) 49.308 1.490 33.10 <2e-16 ***

## cov_obs 2.686 2.148 1.25 0.211

##

## Random effects:

## Estimate Std.error z-value

## nu 2.0534 0.0131 156.705

## theta1 (fixed) 0.5000 NA NA

## theta2 0.7319 0.1503 4.869

## theta3 4.4240 0.4069 10.872

## theta4 2.0788 0.2450 8.487

##

## Measurement error:

## Estimate Std.error z-value

## std. dev 9.4917 0.6508 14.59

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Log-Likelihood: -1264.763

## Number of function calls by 'optim' = 27

## Optimization method used in 'optim' = L-BFGS-B

##

## Time used to: fit the model = 2.66844 minsWe can also fix the entire theta vector at once using the

fix_theta parameter. This is particularly useful when we

have strong prior knowledge about all the parameters:

# Fit model with all theta parameters fixed

fit_ns_fixed_all <- graph_lme(y ~ cov_obs,

graph = pems_graph,

model = list(type = "WhittleMatern",

B.sigma = B.sigma,

B.range = B.range,

fem = TRUE),

model_options = list(fix_theta = c(0.5, 0.8, 1.2, 0.3))) # Fix the entire theta vector## Warning in rSPDE::rspde_lme(formula = formula, loc =

## cbind(df_data[[".edge_number"]], : All optimization methods failed to provide a

## numerically positive-definite Hessian. The optimization method with largest

## likelihood was chosen. You can try to obtain a positive-definite Hessian by

## setting 'improve_hessian' to TRUE.

summary(fit_ns_fixed_all)##

## Latent model - Generalized Whittle-Matern

##

## Call:

## graph_lme(formula = y ~ cov_obs, graph = pems_graph, model = list(type = "WhittleMatern",

## B.sigma = B.sigma, B.range = B.range, fem = TRUE), model_options = list(fix_theta = c(0.5,

## 0.8, 1.2, 0.3)))

##

## Fixed effects:

## Estimate Std.error z-value Pr(>|z|)

## (Intercept) 49.574 NA NA NA

## cov_obs 2.325 NA NA NA

##

## Random effects:

## Estimate Std.error z-value

## nu 3.998 NA NA

## theta1 (fixed) 0.500 NA NA

## theta2 (fixed) 0.800 NA NA

## theta3 (fixed) 1.200 NA NA

## theta4 (fixed) 0.300 NA NA

##

## Measurement error:

## Estimate Std.error z-value

## std. dev 13.74 NA NA

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Log-Likelihood: -1327.07

## Number of function calls by 'optim' = 501

## Optimization method used in 'optim' = L-BFGS-B

##

## Time used to: fit the model = 2.54464 minsSimilarly, we can provide starting values for the entire theta vector

with start_theta:

# Fit model with starting values for theta parameters

fit_ns_start <- graph_lme(y ~ cov_obs,

graph = pems_graph,

model = list(type = "WhittleMatern",

B.sigma = B.sigma,

B.range = B.range,

fem = TRUE),

model_options = list(start_theta = c(0.4, 0.7, 1.0, 0.2))) # Starting values for theta vector## Warning in rSPDE::rspde_lme(formula = formula, loc =

## cbind(df_data[[".edge_number"]], : optim method L-BFGS-B failed to provide a

## positive-definite Hessian. Another optimization method was used.

summary(fit_ns_start)##

## Latent model - Generalized Whittle-Matern

##

## Call:

## graph_lme(formula = y ~ cov_obs, graph = pems_graph, model = list(type = "WhittleMatern",

## B.sigma = B.sigma, B.range = B.range, fem = TRUE), model_options = list(start_theta = c(0.4,

## 0.7, 1, 0.2)))

##

## Fixed effects:

## Estimate Std.error z-value Pr(>|z|)

## (Intercept) 46.007 5.889 7.812 5.61e-15 ***

## cov_obs 2.284 1.933 1.182 0.237

##

## Random effects:

## Estimate Std.error z-value

## nu 0.126500 0.001464 86.404

## theta1 3.279336 0.195212 16.799

## theta2 2.556036 1.709524 1.495

## theta3 -0.221250 0.211386 -1.047

## theta4 0.572628 1.721597 0.333

##

## Measurement error:

## Estimate Std.error z-value

## std. dev 6.8814 0.4118 16.71

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Log-Likelihood: -1225.685

## Number of function calls by 'optim' = 501

## Optimization method used in 'optim' = Nelder-Mead

##

## Time used to: fit the model = 3.27735 minsFitting the inlabru rSPDE model

Let us then fit the same model using inlabru now. We

start by defing the rSPDE model with the

rspde.metric_graph() function:

rspde_model_nonstat <- rspde.metric_graph(pems_graph,

B.sigma = B.sigma,

B.range = B.range,

parameterization = "matern") Let us now create the data.frame() and the vector with

the replicates indexes:

data_rspde_bru_ns <- graph_data_rspde(rspde_model_nonstat, bru = TRUE)Let us create the component and fit.

cmp_nonstat <-

y ~ -1 + Intercept(1) + cov_obs + field(

cbind(.edge_number, .distance_on_edge),

model = rspde_model_nonstat

)

rspde_fit_nonstat <-

bru(cmp_nonstat,

data = data_rspde_bru_ns[["data"]],

family = "gaussian",

options = list(num.threads = "1:1")

)We can get the summary:

summary(rspde_fit_nonstat)## inlabru version: 2.12.0

## INLA version: 25.03.13

## Components:

## Intercept: main = linear(1), group = exchangeable(1L), replicate = iid(1L), NULL

## cov_obs: main = linear(cov_obs), group = exchangeable(1L), replicate = iid(1L), NULL

## field: main = cgeneric(cbind(.edge_number, .distance_on_edge)), group = exchangeable(1L), replicate = iid(1L), NULL

## Likelihoods:

## Family: 'gaussian'

## Tag: ''

## Data class: 'metric_graph_data', 'data.frame'

## Response class: 'numeric'

## Predictor: y ~ .

## Used components: effects[Intercept, cov_obs, field], latent[]

## Time used:

## Pre = 1.92, Running = 12.7, Post = 0.342, Total = 14.9

## Fixed effects:

## mean sd 0.025quant 0.5quant 0.975quant mode kld

## Intercept 49.730 2.927 43.896 49.742 55.498 49.741 0

## cov_obs 2.409 1.753 -1.032 2.410 5.846 2.410 0

##

## Random effects:

## Name Model

## field CGeneric

##

## Model hyperparameters:

## mean sd 0.025quant 0.5quant

## Precision for the Gaussian observations 0.020 0.002 0.016 0.020

## Theta1 for field 3.458 0.540 2.450 3.441

## Theta2 for field 2.211 0.350 1.553 2.201

## Theta3 for field -0.552 0.920 -2.447 -0.523

## Theta4 for field -0.226 0.648 -1.559 -0.207

## Theta5 for field 1.167 0.678 -0.082 1.140

## 0.975quant mode

## Precision for the Gaussian observations 0.024 0.020

## Theta1 for field 4.572 3.362

## Theta2 for field 2.928 2.158

## Theta3 for field 1.172 -0.396

## Theta4 for field 0.988 -0.119

## Theta5 for field 2.582 1.014

##

## Deviance Information Criterion (DIC) ...............: 2302.45

## Deviance Information Criterion (DIC, saturated) ....: 428.69

## Effective number of parameters .....................: 102.72

##

## Watanabe-Akaike information criterion (WAIC) ...: 2295.17

## Effective number of parameters .................: 78.35

##

## Marginal log-Likelihood: -1240.58

## is computed

## Posterior summaries for the linear predictor and the fitted values are computed

## (Posterior marginals needs also 'control.compute=list(return.marginals.predictor=TRUE)')We can obtain outputs with respect to parameters in the original

scale by using the function rspde.result():

result_fit_nonstat <- rspde.result(rspde_fit_nonstat, "field", rspde_model_nonstat)

summary(result_fit_nonstat)## mean sd 0.025quant 0.5quant 0.975quant mode

## Theta1.matern 3.458340 0.539699 2.449990 3.441030 4.571940 3.362370

## Theta2.matern 2.211230 0.349542 1.553260 2.201460 2.928300 2.157550

## Theta3.matern -0.551695 0.920372 -2.446950 -0.523498 1.172430 -0.395833

## Theta4.matern -0.226348 0.647796 -1.559260 -0.206865 0.988389 -0.118779

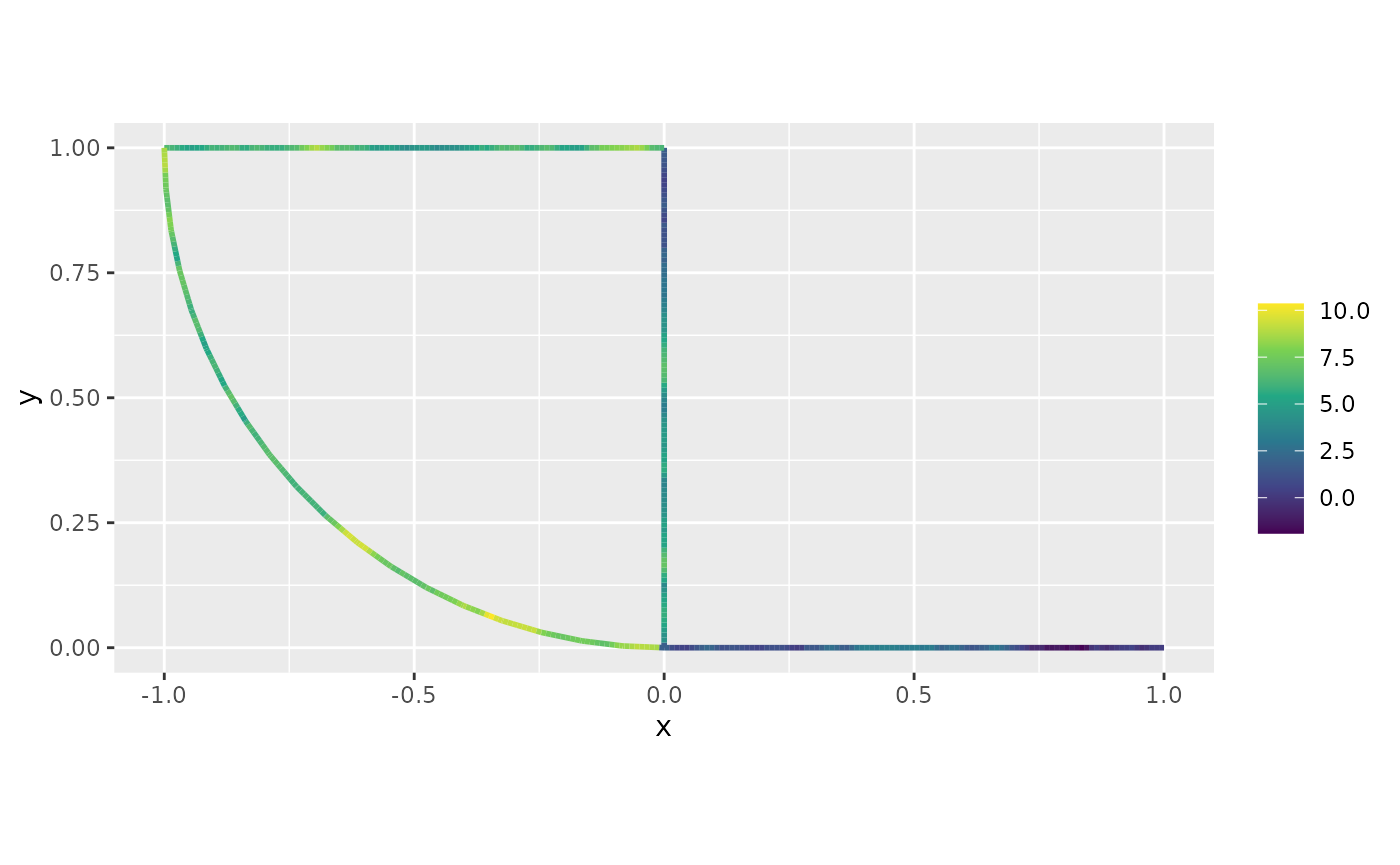

## nu 1.485920 0.235739 0.963846 1.512010 1.857510 1.593250We can also plot the posterior densities. To this end we will use the

gg_df() function, which creates ggplot2

user-friendly data frames:

posterior_df_fit <- gg_df(result_fit_nonstat)

ggplot(posterior_df_fit) + geom_line(aes(x = x, y = y)) +

facet_wrap(~parameter, scales = "free") + labs(y = "Density")